Why Partitioning?

A lot of projects start with a single database where all the data for all tenants is stored in the same table. This works for a while, but as the number of rows of data build up, you start to notice things slow to a crawl. Even tenants that don’t have much data are seeing their performance drag since they are in the same bucket as much larger tenants.

This isn’t that different from trying to find nuts, bolts, and washers in my garage. When I keep them all in a giant peanut butter jar, finding the right size took forever. When someone gifted me an organizer cabinet with lots of little drawers, I collated them by type and size. Now finding what I want is really quick!

There is a similar concept for databases called partitioning or sharding. First you select a partition key. Often, in a multi-tenant solution, the tenant id is a natural choice. Then you make copies of the database for each key value or a range of key values and migrate the matching data into each. There are often other factors at play but partitioning can go a long way in improving performance.

The Cost of Partitioning

This may sound initially simple till you realize that you traded your performance problem for a maintenance problem. How are you supposed to keep the data structures in sync across all of those databases? What if you get in a fractured state when an update script fails on 10% of your databases? If that wasn’t bad enough, you start getting requirements to query data across tenants. Now you have to find some way to run a query across all of your shards and combine the results in some central location.

PostgreSQL Table Partitioning

This problem isn’t new and there have been many tools invented to help facilitate sharding. Earlier this year I was introduced to the concept of table partitioning implemented in PostgreSQL. It is different than the traditional sharding model since you partition at the table level instead of the database level. It also uses inheritance where each partition inherits the structure of the parent table and querying the parent table queries all of the partitions.

For a practical example, lets imagine that you are developing an app for colleges and the data lent itself to being partitioned by school. You would probably create the following tables:

create table "school" (

"id" serial primary key,

"name" text not null

);

create table "student" (

"id" serial,

"school_id" int not null,

"first_name" text not null,

"last_name" text not null,

primary key (id, school_id),

foreign key (school_id) references school(id)

) partition by list("school_id");The “school” table wouldn’t be partitioned since it is your list of tenants, but the tables that depend on it would be. You don’t have to partition every table and sometimes I don’t if the volume of that table for each tenant is pretty low. Every partitioned table needs to have the partition key as a column (e.g. “school_id”) and that column must be part of the primary key.

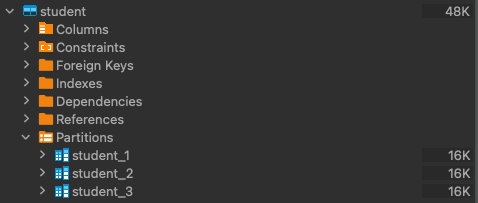

I chose to partition by a single value rather than a range. This allowed me to name the partition after its partition key. So for a couple schools you would see something like the following (I’m using DBeaver):

Generally I create and remove the partitioned tables using triggers on the tenant table. The following is an example trigger for creating the partition. You would need one trigger per table partitioned by school or you could do them all in one function.

CREATE OR REPLACE FUNCTION school_student_partition_generation()

RETURNS TRIGGER AS $$

DECLARE student_partition_table TEXT;

DECLARE school_id INT;

BEGIN

school_id := NEW."id";

student_partition_table := 'student_' || school_id;

EXECUTE 'CREATE TABLE IF NOT EXISTS "' || student_partition_table || '" PARTITION OF "student" FOR VALUES IN (''' || school_id || ''')';

RETURN NEW;

END;

$$ LANGUAGE plpgsql;

CREATE TRIGGER school_student_partition_generation_trigger

BEFORE INSERT ON "school" FOR EACH ROW

EXECUTE PROCEDURE school_student_partition_generation();If you query the “student” table directly then it will query all child tables.

explain select * from student;

QUERY PLAN |

-----------------------------------------------------------------+

Append (cost=0.00..66.45 rows=2430 width=72) |

-> Seq Scan on student_1 (cost=0.00..18.10 rows=810 width=72)|

-> Seq Scan on student_2 (cost=0.00..18.10 rows=810 width=72)|

-> Seq Scan on student_3 (cost=0.00..18.10 rows=810 width=72)|If you query it with a filter on the partition key, then it will only send the query to the relevant tables.

explain select * from student where school_id in (1, 2);

QUERY PLAN |

---------------------------------------------------------------+

Append (cost=0.00..40.33 rows=16 width=72) |

-> Seq Scan on student_1 (cost=0.00..20.12 rows=8 width=72)|

Filter: (school_id = ANY ('{1,2}'::integer[])) |

-> Seq Scan on student_2 (cost=0.00..20.12 rows=8 width=72)|

Filter: (school_id = ANY ('{1,2}'::integer[])) |Also, if you can live within a single partition in your query, then you can see significant performance gains by querying that partition directly. I think that at least one reason it is faster is that it doesn’t have to apply the filter condition to each partition.

explain select * from student_1;

QUERY PLAN |

-----------------------------------------------------------+

Seq Scan on student_1 (cost=0.00..18.10 rows=810 width=72)|One flexibility I appreciate about this solution is that you can have different partition keys per table. This can be helpful if you partition at multiple levels in a hierarchy (e.g. “state” and “school”).

Schema updates are much easier with table partitioning than database sharding. You just make your schema updates to the parent table like you would in a non-partitioned database and the changes propagate to the children. This is also true about adding indexes and constraints.

There are a few downsides but they are manageable. One is that you have to include the partition key in the primary key. So you end up using composite keys everywhere which can bloat the table a bit. Also, you can’t partition materialized views. So you practically can’t use them unless you are willing to take the performance hit of having it query every partition in a table. When I find myself wanting a materialized view, I instead just create a partitioned table in a separate schema and truncate and dump data into it on a schedule.

—

I have really enjoyed using PostgreSQL table partitioning! It alleviates or solves most of my issues with sharding while giving comparable performance gains.

Erik Murphy

Erik is an agile software developer in Charlotte, NC. He enjoys working full-stack (CSS, JS, C#, SQL) as each layer presents new challenges. His experience involves a variety of applications ranging from developing brochure sites to high-performance streaming applications. He has worked in many domains including military, healthcare, finance, and energy.